Why did Kurzweil synths bring human feeling to digital synthesis?

|

moki

IsraTrance Junior Member

Started Topics :

38

Posts :

1931

Posted : Mar 23, 2015 11:57:18

|

I know this could be the next production topic without any reply but I want to take the risk and two minutes to ask you something I am very interested in.

Does anyone here know why Kurzweil synthesizers are supposed to have brought human feeling to digital synthesis? In case you never heard of Kurzweil:

http://www.soundonsound.com/sos/jun07/articles/raykurzweil.htm

Kurzweil invented self-composing music software as a teenager, and after that optical character recognition (pattern recognition of letters and words), speech recognition, and text-to-speech audio synthesis. Currently he is a director of engineering at Google and is probably most famous for his rather philosophical books on the singularity, transhumanism and artificial intelligence.

However, some of his earlier books describe the basics of speech synthesis and pattern recognition, which I found very interesting because I had never read or seen anything like it before. For you this might not be such a new field...

But what is it about the Kurzweil synthesizers that is so special? Are they built and programmed in a totally different way from the others? Do they synthesize differently? From what I have read so far, I gather that they synthesized the sound of a piano in a native way, different from the rest of the synths at that time, but is this still the state of the art today?

Also, does anyone have more resources on how this self-composing software worked?

And did other music software and hardware giants follow these developments in the field of speech synthesis after that?

|

|

|

frisbeehead

IsraTrance Junior Member

Started Topics :

10

Posts :

1352

Posted : Mar 23, 2015 15:55

|

|

I think you already know the answer to that. If you check the accessibility options on a Mac, you have very clever "VoiceOver" options that read out what's on the screen or what you type on the keyboard. It can also transform what you say into text. All of this is very advanced, has seen major improvements with the latest updates, and is presumably still moving forward. Google also has similar technologies, some of which you can use online, and they will presumably continue to evolve a lot too, 'cause it still feels like kindergarten for such technologies.

|

|

moki

IsraTrance Junior Member

Started Topics :

38

Posts :

1931

Posted : Mar 23, 2015 16:52

|

Yes, these are features we are already very used to. Since Siri, the iPhone's voice recognition software, arrived on the market, many others have followed. Today, I can't even imagine what people did before they had a voice-recognition Google translator on their smartphones. However, at the time Kurzweil wrote his books on technical issues, these things did not exist. Actually, at that time not even an AI chess world champion existed; computers were not fast enough to think through all possible moves in a chess game... I believe he even invented the basics of voice synthesis, or at least got the first engineers together. To be honest, I am extremely interested in a virtual copy of my voice talking and making music by itself.

However, understanding this type of software does not mean that his way of synthesis has been widely adopted or even understood by musicians. Stevie Wonder was part of the project with Kurzweil synthesizers and I guess a lot of mainstream musicians adopted this for their work, but what about electronic styles? And what about the algorithms for the self-composing music? Who would understand these issues better than trance musicians?

|

|

|

frisbeehead

IsraTrance Junior Member

Started Topics :

10

Posts :

1352

Posted : Mar 23, 2015 18:50

|

If anyone could be credited with the invention of such technologies, that would be him! He was even honored for it by the American president of the time.

Chess has always been in the epicentre when it comes to testing AI. There's the famous episode with Kasparov, although it wasn't without a touch of controversy. But I think that threshold has been surpassed as well. Computers today can presumably beat a chess master at his own game.

I think his main contribution for musicians, though, was technology that could suggest, as with chess, the next possible moves, and things that have to do with pattern recognition: speech synthesis, text-to-speech and so on. I confess to not being a fan of their products, though; even at the time they were matched by serious players of the game like Roland, Korg and Yamaha. This thing with the piano was a bet with Stevie Wonder. No wonder Stevie would show an interest, right? But sound-wise, even that famous piano sound, which undoubtedly was a step forward in its time, can't stand next to something as trivial as round robin samples, right? If you pick one of those piano libraries for Kontakt and put it next to anything from that period, you'll see that besides the hype and nostalgia, there's not much else to it when it comes to recreating acoustic instruments. Those machines have a sound all their own, though, sort of like other classic samplers, like the E-mu and Akai stuff, 12-bit stuff, older converters with a more edgy sound...

|

|

|

moki

IsraTrance Junior Member

Started Topics :

38

Posts :

1931

Posted : Mar 24, 2015 00:03

|

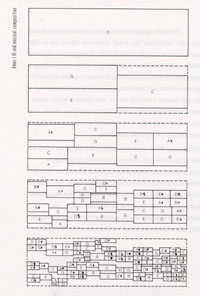

Yes, programming the statistical trends of musical behavior (the next possible moves in terms of harmony) is one of the methods that was used in the sixties for self-composing music.

Other methods are:

- "Rote processing", like Kemal Ebcioglu's program for harmonizing chorales. Such programs use top-down elaboration, starting from a general archetype of musical form and working recursively.

In one case Ebcioglu's program could even duplicate Bach's own solutions...

- Searches. With this method you can actually discriminate among solutions of musical problems.
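To make the statistical method mentioned above a bit more concrete, here is a minimal sketch of a first-order Markov chain: it counts which note follows which in an existing melody and then generates a new one from those counts. This is only an illustration under my own assumptions, not a reconstruction of any historical program; the training melody, the function names `train_transitions` and `generate`, and the fixed random seed are all hypothetical.

```python
import random
from collections import defaultdict

def train_transitions(melody):
    """Count how often each note is followed by each other note."""
    table = defaultdict(lambda: defaultdict(int))
    for current, following in zip(melody, melody[1:]):
        table[current][following] += 1
    return table

def generate(table, start, length, rng):
    """Walk the table, picking each next note in proportion to its count."""
    notes = [start]
    while len(notes) < length:
        options = table.get(notes[-1])
        if not options:  # dead end: this note never had a successor
            break
        choices = list(options.keys())
        weights = list(options.values())
        notes.append(rng.choices(choices, weights=weights, k=1)[0])
    return notes

# Hypothetical training melody as MIDI note numbers (a C major fragment).
melody = [60, 62, 64, 62, 60, 64, 65, 67, 65, 64, 62, 60]
table = train_transitions(melody)
new_melody = generate(table, start=60, length=8, rng=random.Random(1))
print(new_melody)
```

Every step in the generated melody is a transition that already occurred in the source melody, which is why the output tends to sound stylistically close to the input while still being a different sequence.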

Highly recommended reading from the book The Age of Intelligent Machines: http://www.kurzweilai.net/the-age-of-intelligent-machines-artificial-intelligence-and-musical-composition

As far as the round robin samples are concerned (I googled and found "a way to let sample developers play back a different sampled version of the same sound each time you hit the same key"), I don't think that Kurzweil synths are weaker than that. Actually they are even able to measure the pressure you apply with your finger on the keyboard and the time it took, so the sample is different every time, but not randomly...
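The difference between the two ideas can be sketched in a few lines. Round robin rotates through several recordings of the same note regardless of how you play, while velocity-based selection picks a recording from how hard the key was hit. This is a simplified illustration and not how any particular Kurzweil or Kontakt instrument is implemented; the sample names, the three velocity layers and their boundaries are assumptions made up for the example.

```python
from itertools import cycle

# Hypothetical sample names; a real instrument would load audio files here.
ROUND_ROBIN_TAKES = ["C4_take1", "C4_take2", "C4_take3"]
# Hypothetical layers: (highest velocity covered, sample name).
VELOCITY_LAYERS = [(42, "C4_soft"), (84, "C4_medium"), (127, "C4_hard")]

def make_round_robin(takes):
    """Return a function that hands out the next take on every key press."""
    rotation = cycle(takes)
    return lambda: next(rotation)

def pick_by_velocity(velocity, layers=VELOCITY_LAYERS):
    """Pick the first layer whose upper bound covers the key velocity."""
    if not 0 <= velocity <= 127:
        raise ValueError("velocity is assumed to be in the MIDI range 0-127")
    for upper_bound, sample in layers:
        if velocity <= upper_bound:
            return sample

next_take = make_round_robin(ROUND_ROBIN_TAKES)
round_robin_result = [next_take() for _ in range(4)]
velocity_result = [pick_by_velocity(v) for v in (30, 30, 100)]
print(round_robin_result)
print(velocity_result)
```

With round robin, hitting the same key twice in the same way gives two different takes; with velocity layers, the same touch gives the same sample and a different touch gives a different one, which matches the "different every time, but not randomly" description.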

|

|

|

moki

IsraTrance Junior Member

Started Topics :

38

Posts :

1931

Posted : Mar 24, 2015 12:20

|

|

ansolas

IsraTrance Full Member

Started Topics :

108

Posts :

977

Posted : Mar 26, 2015 19:07

|

|

frisbeehead

IsraTrance Junior Member

Started Topics :

10

Posts :

1352

Posted : Mar 27, 2015 22:53

|

|

tons of interesting info here! thanks! keep it coming, don't stop

|

|

moki

IsraTrance Junior Member

Started Topics :

38

Posts :

1931

Posted : Jul 11, 2015 01:50

|

Here is a link to the paper "Automated Composition in Retrospect: 1956-1986" about the beginnings of algorithmic music (see link at the end). Maybe you are interested to know how it began and what the initial algorithms were.

As a matter of fact, algorithmic music began long before computers were invented. People usually tend to perceive algorithmic music as not creative and not mysterious enough because it was generated by a machine. Expressing emotions and a personality in an emotional frame of reference is often considered to be unique to humans and the source of all human creativity. What people forget is that emotions are not what makes us unique; millions of other species have them too. What makes us unique is reason: for example, the algorithm that some of our ancient ancestors created for using tools to make fire or to shape a stone.

Here is an interesting read about the history of algorithmic composition:

https://ccrma.stanford.edu/~blackrse/algorithm.html

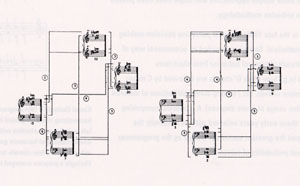

It is interesting to know that algorithmic composition was used long before computers were invented, for example the canonic composition of the 16th century:

Quote:

| The prevailing method was to write out a single voice part and to give instructions to the singers to derive the additional voices from it. The instruction or rule by which these further parts were derived was called a canon, which means 'rule' or 'law.' For example, the second voice might be instructed to sing the same melody starting a certain number of beats or measures after the original; the second voice might be an inversion of the first or it might be a retrograde |

|
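The rules named in the quote (delayed entry, inversion, retrograde) are simple enough to write down as functions on a list of notes. The sketch below is my own illustration, not taken from the linked article: the leader melody is made up, pitches are MIDI note numbers, and the inversion is a strict chromatic mirror around the first pitch, whereas a historical canon might well invert within the mode instead.

```python
# A hypothetical leader voice as (MIDI pitch, duration in beats) pairs.
leader = [(60, 1), (62, 1), (64, 2), (65, 1), (67, 3)]

def delayed(voice, beats):
    """Follower enters later: prepend a rest (pitch None) of the given length."""
    return [(None, beats)] + list(voice)

def inverted(voice):
    """Mirror every interval around the first pitch of the voice."""
    axis = voice[0][0]
    return [(2 * axis - pitch, duration) for pitch, duration in voice]

def retrograde(voice):
    """Play the voice backwards."""
    return list(reversed(voice))

second_voice = delayed(leader, beats=2)
mirror_voice = inverted(leader)
backward_voice = retrograde(leader)
print(second_voice)
print(mirror_voice)
print(backward_voice)
```

The singers only need the leader part plus the name of the rule, which is exactly the "write one voice, derive the rest" procedure the quote describes.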

Even Mozart used automated composition "assembling a number of small musical fragments, and combining them by chance, piecing together a new piece from randomly chosen parts".
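As a rough sketch of that kind of chance-based assembly: prepare a table of pre-written fragments for every bar and let a dice roll decide which one is used. The use of two dice, the number of bars and the fragment labels are all assumptions for the example; real fragments would be short pieces of notation rather than strings.

```python
import random

# Hypothetical fragment table: one row per bar, one labeled fragment
# for every possible total of two dice (2 to 12).
BARS = 4
fragment_table = [
    {total: f"bar{bar}_frag{total}" for total in range(2, 13)}
    for bar in range(1, BARS + 1)
]

def roll_two_dice(rng):
    """Return the total of two six-sided dice."""
    return rng.randint(1, 6) + rng.randint(1, 6)

def assemble_piece(table, rng):
    """Pick one pre-written fragment per bar according to a dice roll."""
    return [row[roll_two_dice(rng)] for row in table]

piece = assemble_piece(fragment_table, random.Random(2015))
print(piece)
```

The composer's work is in writing fragments that fit together in any combination; the dice only choose among options that were already made to work.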

I am not so familiar with the modern methods of making algorithmic music; actually I only found scientific papers about the beginnings and about the 80s and 90s. Today, I guess probability has been followed by neural network methods, but this is only an assumption. For me this would be THE cutting-edge algorithmic music.

Maybe it has been integrated in one form or another into modern music software? Do you sometimes have the feeling that the software is writing the music instead of you? When exactly? Any examples? At what point would you say you would never be able to complete your production process without the help of the machine?

It is strange to hear that Kurzweil brought human feeling to synthesis, because he actually did exactly the opposite in the decades after that: he stated that the day will come when no human will be able to keep up and make up-to-date art without entering the realm of transhumanism. So to speak, there will come a day when everyone will simply need to wire up to an additional neural network of databases and algorithms in order to keep up with human progress. To me Kurzweil is someone who really makes a fetish of human reason. So what was the point of bringing human feeling to synthesis? I would say he simply brought a better auditory simulation to the digital synthesis experience. Something like making virtual reality more real. But is it more human?

Back to algorithmic composition. Is it possible that algorithmic composition has nowadays given way to real-time audio and visual processing? I can't elaborate much on the question because I am at the end of my post, but is it possible that inducing real-time visual reactions to an audio stream has become the trending subject of exploration when it comes to algorithms for generative art today?

http://digitalmusics.dartmouth.edu/~larry/algoCompClass/readings/ames/ames_automated_composition.pdf

|

|

|

moki

IsraTrance Junior Member

Started Topics :

38

Posts :

1931

Posted : Jul 12, 2015 01:40

|

|

moki

IsraTrance Junior Member

Started Topics :

38

Posts :

1931

Posted : Jul 12, 2015 02:18

|

|

|